The Convergence of Generative Intelligence and Spatial Computing: Transforming Incremental Mechanics into Immersive Realities

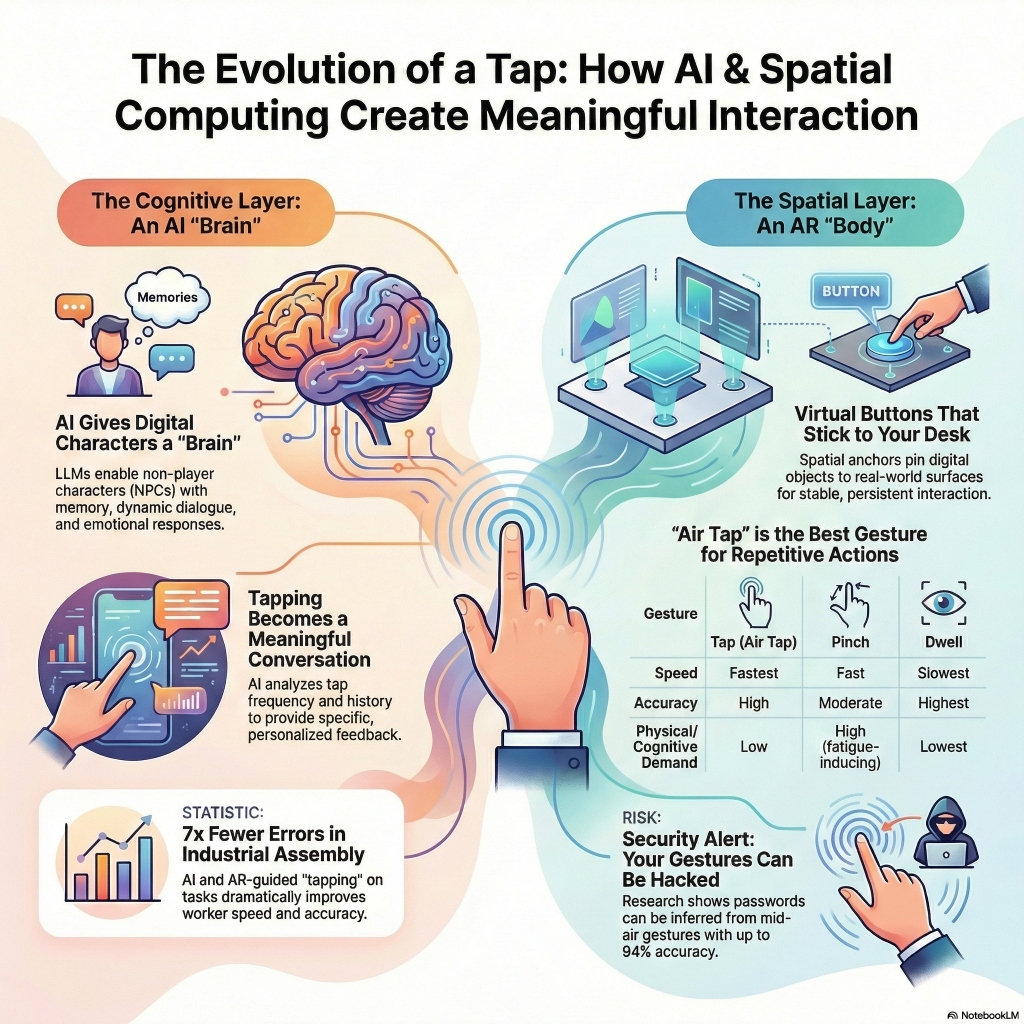

The evolution of human-computer interaction (HCI) is currently defined by a transition from static, two-dimensional interfaces toward dynamic, three-dimensional environments where digital and physical realities are inextricably linked. At the heart of this transformation is the enhancement of foundational interaction paradigms, most notably the “tapping” mechanic. Once a binary input localized to a physical screen, the tapping mechanic is being reinvented through the integration of Large Language Models (LLMs) and spatial computing.[1, 2, 3] This technological synthesis shifts the paradigm from deterministic software responses toward probabilistic, context-aware systems that adapt to user behavior in real time. By leveraging the cognitive depth of generative AI and the environmental awareness of augmented reality (AR), developers are creating experiences where a simple tap on a “virtual red button” triggers complex, personalized narrative progressions and physically anchored environmental changes.[4, 5, 6]

The Cognitive Layer: Large Language Models and Smart NPC Integration

The traditional role of the non-player character (NPC) in gaming and simulations has been limited by the constraints of predefined scripts and decision trees. These “canned” responses often lead to immersion-breaking repetition, particularly in incremental games where the primary mechanic—tapping—is performed thousands of times.[7, 8] The introduction of LLMs addresses this limitation by providing NPCs with a “Character Brain” capable of dynamic dialogue generation, emotional modeling, and long-term memory.[9, 10]

Retrieval-Augmented Generation for Narrative Continuity

For an NPC to react intelligently to tapping progress, it must possess a consistent understanding of the game world and the user’s history. This is achieved through Retrieval-Augmented Generation (RAG). In this framework, the game’s narrative assets, lore, and current state are decomposed into text “chunks,” vectorized, and stored in a high-dimensional vector database.[1] When a user reaches a tapping milestone, the system performs a similarity search to retrieve the most relevant narrative context before generating a response.
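
The retrieval step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production pipeline: a toy bag-of-words `embed` function stands in for a learned embedding model, an in-memory list stands in for the vector database, and the lore chunks, `retrieve`, and `build_prompt` are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical lore chunks; a real system would store learned embeddings in a vector database.
LORE_CHUNKS = [
    "The red button was forged by the Tinker Guild to wake the sleeping castle.",
    "Every thousand presses of the red button raises a new tower on the castle wall.",
    "The harbor town trades fish and salt with the northern villages.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned embedding model)."""
    words = (w.strip(".,!?") for w in text.lower().split())
    return Counter(w for w in words if w)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Similarity search: return the k chunks closest to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(milestone: int, chunks: list[str]) -> str:
    """Prepend the retrieved lore to the milestone event before calling the LLM."""
    event = f"The player just reached {milestone} presses of the red button."
    context = "\n".join(f"- {c}" for c in retrieve(event, chunks))
    return f"Lore context:\n{context}\n\nEvent: {event}\nRespond in character."


print(build_prompt(1000, LORE_CHUNKS))
```

For the 1,000-tap milestone, the two button-related chunks outrank the unrelated harbor chunk, so only on-topic lore reaches the prompt.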

The effectiveness of these models is evaluated across several dimensions, including noise robustness, information integration, and counterfactual robustness.[1] For instance, newer models like GPT-4 demonstrate superior performance in integrating disparate pieces of game-state data into a single coherent response, whereas models like Mixtral have shown high efficacy in “negative rejection”—the ability to ignore irrelevant or “noisy” inputs during the dialogue generation process.[1]

| Task Category | GPT-4 Performance | Mixtral Performance | Application to Tapping Mechanics |
|---|---|---|---|
| Noise Robustness | High | Moderate | Ignoring accidental taps or erratic input patterns. |
| Information Integration | Exceptional | High | Combining tap counts with story milestones. |
| Negative Rejection | High | High (specific configs) | Preventing the NPC from reacting to irrelevant user actions. |
| Counterfactual Robustness | High | Moderate | Maintaining lore consistency even if the user attempts to “trick” the AI. [1] |

The Goals and Actions Framework

To move beyond mere conversation, AI agents utilize “Goals and Actions” systems. In platforms such as Inworld AI, a “goal” acts as a consequence mechanism triggered by an activation event.[9] If the “activation” is defined as a specific tapping frequency or a milestone (e.g., the 1,000th tap), the NPC can execute an “action,” such as suggesting a new quest, changing its emotional state to “impressed,” or providing a unique promo code.[5, 9]

These actions are not strictly scripted. Instead, they utilize parameters that inject real-time client data into the LLM’s prompt at runtime. This allows an NPC to say, “I see you’ve tapped that button exactly 1,042 times in the last minute—your speed is remarkable!” rather than relying on a generic “Well done”.[9] This level of granularity in the feedback loop taps into the psychological principles of reinforcement, making the mundane act of tapping feel meaningful and acknowledged.[11]
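
The goal/action pattern and runtime parameter injection described above can be sketched as follows. This is not Inworld's API: the `Goal` class, `check_goals`, and the state keys `tap_count` and `taps_per_sec` are hypothetical, and the returned strings stand in for the instruction a real system would send to its LLM.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Goal:
    """Hypothetical goal: an activation condition paired with an action prompt template."""
    name: str
    is_active: Callable[[dict], bool]  # activation event, evaluated against live game state
    action_template: str               # {placeholders} are filled from client data at runtime
    fired: bool = False


def check_goals(goals: list[Goal], state: dict) -> list[str]:
    """Fire each goal at most once and return its prompt with real-time data injected."""
    prompts = []
    for goal in goals:
        if not goal.fired and goal.is_active(state):
            goal.fired = True
            prompts.append(goal.action_template.format(**state))
    return prompts


goals = [
    Goal(
        name="thousand_taps",
        is_active=lambda s: s["tap_count"] >= 1000,
        action_template=(
            "The player has tapped the red button {tap_count} times, averaging "
            "{taps_per_sec:.1f} taps per second. React as an impressed companion "
            "and suggest a new quest."
        ),
    ),
]

for prompt in check_goals(goals, {"tap_count": 1042, "taps_per_sec": 6.4}):
    print(prompt)  # in a real system this instruction would be sent to the LLM
```

The `fired` flag keeps a milestone from re-triggering on every later tap; a design that wants recurring rewards (every 1,000 taps, say) would re-arm the goal instead.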

The Spatial Layer: Augmented Reality and Environmental Persistence

Spatial computing extends the digital experience into the physical environment, allowing a “virtual red button” to be anchored to a user’s desk, floor, or wall.[2, 6] This requires the device to perform complex spatial mapping and feature detection to understand the geometry of the real world.[6]

Spatial Anchors and Feature Mapping

The core of AR interaction is the spatial anchor—a digital marker tied to stable visual features in the environment, such as corners, edges, or textures.[6] Without these anchors, a virtual button would “drift” or “float” away as the user moved their head or device, shattering the illusion of presence. Platforms like Apple ARKit and Google ARCore build a point cloud or feature map using the device’s camera and Inertial Measurement Unit (IMU).[6]

When a user taps a point in the physical world through their AR display, a process called “hit-testing” is initiated. The system casts a virtual ray from the camera to the detected surface and “drops” an anchor at the 3D coordinates where they intersect.[6]
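
Geometrically, this hit-test is a ray/plane intersection. The sketch below assumes the surface has already been detected and is represented as an infinite plane (a point plus a normal); it ignores the plane's finite extent, which real frameworks also check. `hit_test_plane` is a hypothetical helper, not an ARKit or ARCore call.

```python
from typing import Optional

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_test_plane(origin: Vec3, direction: Vec3, plane_point: Vec3,
                   plane_normal: Vec3, eps: float = 1e-9) -> Optional[Vec3]:
    """Cast a ray from the camera; return where it meets the plane, or None if it misses."""
    denom = dot(plane_normal, direction)
    if abs(denom) < eps:  # ray runs parallel to the surface
        return None
    to_plane = (plane_point[0] - origin[0], plane_point[1] - origin[1], plane_point[2] - origin[2])
    t = dot(plane_normal, to_plane) / denom  # ray parameter at the intersection
    if t < 0:  # the surface lies behind the camera
        return None
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Camera 1.5 m above the floor (y = 0), looking forward and down at 45 degrees.
anchor_position = hit_test_plane((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(anchor_position)  # (0.0, 0.0, -1.5): where the anchor would be dropped
```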

| Platform | Anchor Type / API | Feature Set | Retention |
|---|---|---|---|
| Apple ARKit | ARPlaneAnchor | Detects flat surfaces (walls/floors). | Local session. |
| Google ARCore | HitResult.createAnchor() | Precise 3x4 transform matrix placement. | Local session; hosted Cloud Anchors last up to 24 hours (API-key authorization) or up to 365 days (keyless authorization). |
| Azure Spatial Anchors | 32-character Anchor ID | Cross-platform (iOS/Android/HoloLens). | Long-term cloud persistence. [6] |

The stability of these anchors is crucial for “tapping” interactions. In feature-scarce environments, such as a room with plain white walls and poor lighting, the system may struggle to triangulate its position, leading to scale drift or tracking failure.[6] Therefore, successful AR button implementation requires “textured” environments where the computer vision algorithms can identify unique landmarks.

Gesture Interaction: Air Tap vs. Pinch

In spatial computing, the traditional “tap” on glass is replaced by mid-air gestures. The selection of the appropriate gesture is critical for user experience, as different movements impose varying levels of physical and cognitive demand.[12, 13] Research comparing four selection methods (Push, Tap, Dwell, and Pinch) reveals that the “Tap” gesture (a downward movement of the index finger) is the fastest method while keeping accuracy high and demand low, making it the best fit for high-frequency incremental mechanics; Dwell is the most accurate but also the slowest.[12, 14]

| Gesture | Speed | Accuracy | Physical/Cognitive Demand |
|---|---|---|---|
| Tap (Air Tap) | Fastest | High | Low |
| Pinch | Fast | Moderate | High (fatigue-inducing) |
| Dwell | Slowest | Highest | Lowest |
| Push | Moderate | Moderate | Moderate [12, 14] |

While the “Pinch” gesture (touching the thumb and index finger) is common in distant interactions, it is often error-prone and can lead to “hand fatigue” during prolonged use.[14, 15] For a “tapping progress” mechanic, the Air Tap mimics the proprioceptive feedback of physical tapping, lowering the learning curve for users accustomed to touchscreens.[13]
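
To make the distinction concrete, the two gestures can be told apart from hand-tracking data roughly as follows. This is a sketch under stated assumptions: it takes fingertip positions in metres from some hand-tracking API, treats the y axis as vertical, and uses threshold values that are hypothetical and would need tuning per device.

```python
import math

Vec3 = tuple[float, float, float]

# Hypothetical thresholds; real systems tune these per device and per user.
PINCH_DISTANCE_M = 0.015   # thumb and index tips closer than 1.5 cm count as touching
TAP_DOWN_SPEED_MPS = 0.25  # minimum downward fingertip speed for an Air Tap


def is_pinch(thumb_tip: Vec3, index_tip: Vec3) -> bool:
    """Pinch: the thumb and index fingertips touch."""
    return math.dist(thumb_tip, index_tip) < PINCH_DISTANCE_M


def is_air_tap(index_tip_heights: list[float], dt: float) -> bool:
    """Air Tap: the index fingertip moves sharply downward and then stops or rebounds."""
    if len(index_tip_heights) < 3:
        return False
    velocities = [(b - a) / dt for a, b in zip(index_tip_heights, index_tip_heights[1:])]
    moved_down_fast = min(velocities) < -TAP_DOWN_SPEED_MPS  # most negative = fastest descent
    has_stopped = velocities[-1] >= 0.0                      # no longer moving down
    return moved_down_fast and has_stopped
```

Smoothing of tracking jitter and debouncing of repeated taps are left out for brevity.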

Synthesis: Enhancing the Tapping Mechanic through Convergence

The intersection of LLMs and spatial computing creates a multi-modal feedback loop where the user’s physical actions (tapping) influence a generative digital world. This convergence is not merely about aesthetics; it is about “context awareness”—the ability of the game to understand where the player is, what they are doing, and how they are progressing.[4, 16]

Dynamic Difficulty and Behavioral Adaptation

Using real-time analytics, AI tools can track tapping frequency, accuracy, and timing to calibrate the game’s difficulty dynamically. This is known as Dynamic Difficulty Adjustment (DDA).[11] If a player consistently taps at a high speed, the AI can introduce faster-moving virtual targets or additional obstacles to prevent boredom. Conversely, if the system detects fatigue or erratic tapping patterns—signaling frustration—it can recalibrate the difficulty or trigger an NPC to offer encouragement or a “shortcut”.[11, 17]
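
A minimal DDA rule along these lines might look like the following. The thresholds (more than 6 taps per second counts as "fast", a coefficient of variation above 0.6 counts as "erratic") and the 0-to-1 difficulty scale are hypothetical choices for illustration, not values from the cited sources.

```python
from statistics import mean, pstdev


def adjust_difficulty(difficulty: float, timestamps: list[float],
                      fast_tps: float = 6.0, erratic_cv: float = 0.6,
                      step: float = 0.1) -> tuple[float, str]:
    """Return (new_difficulty, npc_hint) from a window of tap timestamps in seconds."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) <= 0:
        return difficulty, "hold"
    cv = pstdev(gaps) / mean(gaps)  # variability of inter-tap gaps relative to their mean
    taps_per_sec = len(gaps) / (timestamps[-1] - timestamps[0])
    if cv > erratic_cv:
        # Irregular rhythm: possible fatigue or frustration, so ease off and encourage.
        return max(0.0, difficulty - step), "encourage"
    if taps_per_sec > fast_tps:
        # Consistently fast tapping: add faster targets or obstacles to prevent boredom.
        return min(1.0, difficulty + step), "harder"
    return difficulty, "hold"
```

The returned hint is what an NPC layer could act on, for example by offering encouragement or a shortcut when it reads `"encourage"`.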

This creates a “self-improving” game loop. As the user interacts with the virtual button, the AI agent learns the user’s “playstyle profile” and adapts the narrative and challenges accordingly.[4, 18, 19] For example, a player who taps rhythmically might be presented with a music-based challenge, while a player who taps in bursts might be given a “frenzy” power-up.[4, 11]
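
The rhythmic versus bursty distinction can be approximated from tap timestamps alone. The sketch below is one hypothetical heuristic: it splits the stream into bursts at pauses longer than one second and treats a low coefficient of variation as a steady beat. The thresholds and labels are assumptions; a production system might learn such profiles instead.

```python
from statistics import mean, pstdev


def playstyle(timestamps: list[float], pause_s: float = 1.0) -> str:
    """Classify a tap stream as 'rhythmic', 'bursty', or 'unknown' (hypothetical heuristic)."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 4 or mean(gaps) <= 0:
        return "unknown"
    bursts = 1 + sum(g > pause_s for g in gaps)  # a long pause starts a new burst
    within = [g for g in gaps if g <= pause_s]
    if bursts >= 3 and within and mean(within) < pause_s / 4:
        return "bursty"    # rapid flurries separated by pauses: offer a "frenzy" power-up
    if pstdev(gaps) / mean(gaps) < 0.15:
        return "rhythmic"  # steady beat: offer a music-based challenge
    return "unknown"
```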

Case Study: Industrial and Task-Based Applications

The enhancement of tapping mechanics extends beyond gaming into industrial “pick-to-place” assembly and smart factory management.[20, 21] In these contexts, the “tapping” is a gesture used to confirm the selection of a part or the completion of a step.

Research into the “Gest-SAR” framework demonstrates the power of light-guided, gesture-based assembly using spatial augmented reality (SAR).[20] In a study involving standardized assembly tasks (using Lego Duplo as a proxy for industrial components), the integration of SAR and gesture recognition significantly improved performance:

| Metric | Paper Manual | Tablet Instruction | Gest-SAR (Spatial + AI) |
|---|---|---|---|
| Avg. Cycle Time | 7.89 min | 6.99 min | 3.95 min |
| Error Rate | Baseline | Baseline | 7x Less than Paper |
| Cognitive Workload | High | Moderate | Low [20] |

This suggests that when a tapping mechanic is integrated into a spatial environment with real-time AI feedback, users experience a “deeper understanding of spatial relationships” and reduced cognitive load.[20, 22] The AI acts as a “proactive partner,” monitoring the user’s gestures and providing immediate visual or verbal correction if a “tap” is performed on the wrong component.[23, 24]

Psychological and UX Design Principles

To maximize the impact of AI and spatial computing on a simple tapping mechanic, developers must align the technology with human psychological needs. The “tapping” mechanic is fundamentally a loop of “action → reward”.[11]

Reinforcement and Immediate Rewards

Incremental games succeed because they provide instant gratification.[3, 11] By adding LLM-powered NPCs, this gratification becomes social. Instead of just a number increasing on a screen, the player receives a “well-timed” compliment from a companion character who remembers their previous achievements.[5, 10, 19] Research indicates that 43% of gamers would pay for games that include these AI-driven NPCs, as they enhance realism and create a sense of investment in the digital world.[19]

Multi-sensory Feedback and the “Internet of Senses”

The future of spatial tapping involves multi-sensory experiences that engage sight, sound, and touch.[16] Spatial audio—where the “click” sound of the virtual button comes from its exact 3D location—is essential for immersion.[2] Furthermore, advances in haptic feedback, such as ultrasonic haptics, allow users to “feel” the virtual button without wearing gloves.[12]

The “Internet of Senses” (IoS) concept suggests that AI will eventually manage and synchronize these interactions, creating environments that are “indistinguishable from reality”.[16] A tap on a virtual red button could trigger a localized vibration through a smart ring or a wearable haptic suit, providing the tactile confirmation that the brain expects from a physical object.[12, 13, 25]

Security, Privacy, and Technical Risks

While the convergence of these technologies offers significant benefits, it also introduces novel risks. The very sensors that enable spatial awareness and gesture recognition can be exploited to harvest sensitive user data.[4, 21]

Gesture Inference and Privacy

The recording of mid-air interactions poses a security risk, particularly for “virtual keyboards” or “password pads” used in smart factory management.[21, 26] Researchers have demonstrated that a single RGB camera can capture a user’s gestures and, with the aid of machine learning, estimate the password input on an intangible virtual panel with high accuracy.[21]

| Scenario | 2-Digit Accuracy | 4-Digit Accuracy | 6-Digit Accuracy |
|---|---|---|---|
| Known Password Length | 97.03% | 94.06% | 83.83% |
| Unknown Password Length | 85.50% | 76.15% | 77.89% [21] |

This “cyber-physical security” challenge necessitates the development of interfaces that are robust against shoulder-surfing and video-based inference.[21] Furthermore, because AR games collect real-world sensory and environmental information, developers must establish “robust guardrails” to ensure ethical data handling and user trust.[4]

Hallucinations and Inconsistency

In the context of AI NPCs, the risk of “hallucination”—where the LLM generates false or contradictory information—can break the game’s narrative logic.[1, 7, 10] If a player is tapping to build a medieval castle, but the NPC suddenly starts talking about “intergalactic spaceships,” the immersion is lost. To mitigate this, developers use “Contextual Mesh” layers that force the LLM to operate within the fantasy and logic of the game world, effectively filtering out “off-lore” content.[10]

Conclusion: The Future of Incremental Interaction

The enhancement of a simple tapping mechanic through Large Language Models and spatial computing represents a shift from “busywork” to “meaningful interaction”.[23] By integrating “Smart NPCs” that possess memory and emotion, developers can turn a repetitive task into an evolving story.[5, 9, 19] Simultaneously, spatial computing liberates these interactions from the screen, allowing users to engage with their physical environment in intuitive and sensory-rich ways.[2, 6]

As these technologies continue to mature, the line between imagination and reality will “blur even further”.[4] The simple act of tapping a virtual red button will serve as a gateway to hyper-personalized, context-aware digital universes that learn, adapt, and grow alongside the user.[4, 19] The challenge for the next generation of designers and engineers is to balance this immense creative potential with the technical rigor and ethical oversight required to build safe, immersive, and truly responsive spatial environments.[1, 4, 11]

Part I: The Cognitive Architecture of AI-Driven Interactivity

The transformation of the tapping mechanic begins with the software’s ability to “think” about the user’s input. In a standard clicker game, a tap is an event that increments a variable (x=x+1). In an AI-enhanced environment, a tap is a data point in a temporal behavioral profile.[27]

Large Language Models as Behavioral Analysts

LLMs do not merely generate text; they act as reasoning engines. When a user taps a virtual button, the game client sends a “heartbeat” to the LLM-powered agent. This heartbeat contains metadata: the timestamp, the tap pressure (if supported by the hardware), the current game state, and the proximity of other virtual or physical objects.[2, 9]
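
A heartbeat of this kind could be as simple as one serialized record per tap. The field names, the JSON encoding, and `make_heartbeat` below are hypothetical; the point is the contrast with the single `x = x + 1` increment of a standard clicker.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class TapHeartbeat:
    """Hypothetical per-tap payload sent from the game client to the NPC agent."""
    timestamp: float                 # seconds since the Unix epoch
    tap_count: int                   # running total, including this tap
    pressure: Optional[float]        # None when the hardware reports no tap pressure
    game_state: dict = field(default_factory=dict)
    nearby_objects: list = field(default_factory=list)  # (label, distance in metres) pairs


def make_heartbeat(tap_count: int, pressure: Optional[float],
                   game_state: dict, nearby_objects: list) -> str:
    """Serialize one tap as a JSON heartbeat message."""
    beat = TapHeartbeat(time.time(), tap_count, pressure, game_state, nearby_objects)
    return json.dumps(asdict(beat))


print(make_heartbeat(501, None, {"level": 3, "quest": "castle_tower"}, [("coffee mug", 0.4)]))
```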

The agent processes this using a “Self-Improving Feedback Loop”.[28, 29] The system asks: “Is the user tapping with increasing speed? Does this indicate excitement or frustration?” This involves an automated policy validator that blocks “unsafe” or “off-lore” reactions, ensuring the NPC remains within its character profile.[9, 28]
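
The policy-validator step can be illustrated with a deliberately crude check. Here a keyword blocklist and a length cap stand in for whatever validation a real system would use (often a classifier or a second model call); `OFF_LORE_TERMS`, `validate_reply`, `safe_reply`, and the fallback line are hypothetical, written for a medieval-castle setting.

```python
import re

# Hypothetical policy for a medieval setting: banned anachronisms and a length cap.
OFF_LORE_TERMS = {"spaceship", "laser", "smartphone", "internet"}
MAX_WORDS = 60
FALLBACK_LINE = "Keep pressing, traveller. The castle grows with every tap."


def validate_reply(reply: str) -> tuple[bool, str]:
    """Return (ok, reason); a reply fails if it is too long or mentions off-lore terms."""
    words = re.findall(r"[a-z']+", reply.lower())
    if len(words) > MAX_WORDS:
        return False, "too long"
    # Crude plural handling: "spaceships" -> "spaceship".
    hits = OFF_LORE_TERMS.intersection(w.rstrip("s") for w in words)
    if hits:
        return False, "off-lore: " + ", ".join(sorted(hits))
    return True, "ok"


def safe_reply(candidate: str) -> str:
    """Pass in-character replies through; replace blocked ones with a scripted line."""
    ok, _reason = validate_reply(candidate)
    return candidate if ok else FALLBACK_LINE
```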

Mathematical Modeling of User Skill Learning (ACT-R)

To understand how a user “learns” a tapping mechanic, researchers use the Adaptive Control of Thought-Rational (ACT-R) architecture.[30] This computational model investigates skill transfer and motor mechanisms. At fast speeds (intervals of under 500 ms between keypresses), the human brain switches from “conscious” processing to “automatic” motor behavior.[30]

An AI agent can monitor these transitions. By analyzing the “periodicity” of tapping, the AI can determine when a user has mastered the mechanic. This is where the personalized dialogue becomes insightful. Instead of a generic “Good job,” the Smart NPC might remark, “I noticed your rhythm stabilized around the 500th tap—you’ve entered a state of flow, haven’t you?”.[11, 30]
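
Detecting the point where tapping settles can be sketched with a sliding window over inter-tap intervals. This is not an ACT-R model; it is a simple hypothetical heuristic that flags the first window in which every interval is under 500 ms and the intervals vary little (coefficient of variation below 0.1). The window size and thresholds are assumptions.

```python
from statistics import mean, pstdev
from typing import Optional


def rhythm_stabilized_at(timestamps: list[float], window: int = 20,
                         max_interval: float = 0.5, max_cv: float = 0.1) -> Optional[int]:
    """Return the 1-based tap number where fast, steady tapping begins, or None."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    for start in range(len(gaps) - window + 1):
        win = gaps[start:start + window]
        m = mean(win)
        if m > 0 and max(win) < max_interval and pstdev(win) / m < max_cv:
            return start + 1  # gaps[start] begins at tap number start + 1
    return None


# 31 taps with irregular, slower gaps, then 40 steady taps at 250 ms intervals.
taps = [0.0]
for i in range(30):
    taps.append(taps[-1] + (0.9 if i % 2 else 0.3))
for _ in range(40):
    taps.append(taps[-1] + 0.25)
print(rhythm_stabilized_at(taps))  # 31
```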

Memory Systems and Emotional States

The “Character Brain” described in [10] is not a single model but an orchestration of multiple machine learning models that together shape the character’s personality.

- Episodic Memory Management: This layer records every milestone. If the user stops tapping for a week and then returns, the NPC doesn’t restart its dialogue. It uses its episodic memory to say, “Welcome back! The red button missed your touch. We were so close to the next power-up before you left”.[18, 19]

- The Emotions Engine: This engine tracks the “emotional temperature” of the interaction. Inworld’s engine, for example, assigns probability values (0 to 1) to specific emotional states like “Joy,” “Fear,” or “Sadness”.[9, 10]

  - High Frequency Tapping → High Probability of “EXCITEMENT” → Dialogue Tone: Energetic.

  - Low Accuracy/Missing the Button → High Probability of “CONCERN” → Dialogue Tone: Supportive.
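
The two tapping-to-tone rules can be sketched as a scoring step followed by a softmax, which yields probabilities in the 0-to-1 range. Inworld's actual models are not reproduced here: the feature weights, the added NEUTRAL baseline state, and the tone table are hypothetical.

```python
import math

# Hypothetical tone table; NEUTRAL is an added baseline state for illustration.
TONES = {"EXCITEMENT": "energetic", "CONCERN": "supportive", "NEUTRAL": "calm"}


def emotion_probabilities(taps_per_sec: float, hit_rate: float) -> dict[str, float]:
    """Score tapping features per emotion, then normalize with a softmax (sums to 1)."""
    scores = {
        "EXCITEMENT": 0.8 * taps_per_sec,   # faster tapping -> more excitement
        "CONCERN": 8.0 * (1.0 - hit_rate),  # more missed taps -> more concern
        "NEUTRAL": 2.0,                     # constant baseline
    }
    total = sum(math.exp(s) for s in scores.values())
    return {emotion: math.exp(s) / total for emotion, s in scores.items()}


def dialogue_tone(taps_per_sec: float, hit_rate: float) -> tuple[str, float]:
    """Pick the most probable emotion and return its dialogue tone and probability."""
    probs = emotion_probabilities(taps_per_sec, hit_rate)
    emotion = max(probs, key=probs.get)
    return TONES[emotion], probs[emotion]


print(dialogue_tone(8.0, 0.95))  # fast, accurate tapping: tone is "energetic"
```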

This synergy between memory and emotion is what makes an NPC feel like a “living” part of the environment rather than a UI element.[4, 5, 19]

Part II: The Physics and Engineering of Spatial Interaction

Moving the tapping mechanic from the screen to the room requires the digitization of reality. This is not a simple visual overlay; it is a “Cyber-Physical” integration where the digital “button” must respect the laws of the physical world.[21, 31]

Spatial Mapping and Environment Reconstruction

A spatial computing device (like the HoloLens 2 or Apple Vision Pro) uses LiDAR and ToF (Time-of-Flight) sensors to “mesh” the room.[2, 32] This creates a 3D digital twin of the user’s environment. For a “virtual red button” to be placed on a desk, the system must first identify the desk as a “plane”.[2, 6]

The system uses SLAM (Simultaneous Localization and Mapping) to triangulate its position. As the user walks around the room, the device builds a “point cloud”—a collection of thousands of 3D points representing the surfaces in the room.[6]

Hit-Testing and 3x4 Transform Matrices

When the user “Air Taps” the desk to place the button, the system performs the following:

- Ray Casting: A ray is projected from the user’s “eye-gaze” or “hand-pointer” into the point cloud.[6]

- Intersection: The system finds the nearest mesh triangle that the ray intersects.

- Anchor Creation: An ARAnchor is created. Its pose is stored as a 3x4 transform matrix, which contains the rotation (3x3) and the translation (a 3x1 column) relative to the room’s origin.[6] ARKit exposes this pose as a 4x4 homogeneous matrix whose bottom row is [0, 0, 0, 1].

This matrix ensures that no matter how the user moves, the button stays fixed at its anchored position with the correct orientation.[6]
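
The transform math itself is small. The sketch below builds a 3x4 matrix [R | t] and applies it as p_world = R · p_local + t. For brevity it assumes the rotation is a yaw about the vertical y axis (enough for a button lying flat on a desk); the function names are hypothetical, and a 4x4 homogeneous form of the same transform simply appends the row [0, 0, 0, 1].

```python
import math

Vec3 = tuple[float, float, float]
Matrix3x4 = list[list[float]]


def anchor_transform(yaw_rad: float, position: Vec3) -> Matrix3x4:
    """3x4 rigid transform [R | t]: rotation about the y (up) axis, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = position
    return [
        [c,   0.0, s,   tx],
        [0.0, 1.0, 0.0, ty],
        [-s,  0.0, c,   tz],
    ]


def to_world(m: Matrix3x4, local: Vec3) -> Vec3:
    """Map a point from the anchor's local frame to world space: R @ local + t."""
    x, y, z = local
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in m)


# Anchor on a desk 0.75 m high, 1 m in front of the origin, rotated 90 degrees.
anchor = anchor_transform(math.pi / 2, (0.5, 0.75, -1.0))
rim_point = to_world(anchor, (0.1, 0.0, 0.0))  # 10 cm along the button's local x axis
print(rim_point)
```

Because the anchor's world transform never changes, every frame re-renders the button at the same world position; only the camera's view changes as the user moves.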

Occlusion and Realism

To make the “virtual red button” feel real, the system must support occlusion. This means that if the user moves a physical book in front of the virtual button, the button must “disappear” behind the book.[2] This requires real-time depth sensing and “depth-sorting” in the rendering engine (e.g., Apple’s RealityKit or Unity’s Universal Render Pipeline, URP).[2, 32]
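
At its core, occlusion is a per-pixel depth comparison between the virtual content and the sensed real-world depth. Rendering engines perform this on the GPU with a depth buffer; the pure-Python loop below only illustrates the logic, and `composite` and its inputs are hypothetical.

```python
from typing import Optional


def composite(virtual_rgb: list[list[str]], virtual_depth: list[list[Optional[float]]],
              camera_rgb: list[list[str]], real_depth: list[list[float]]) -> list[list[str]]:
    """Per-pixel occlusion: draw the virtual pixel only if it is nearer than the real surface.

    Depths are metres from the camera; None in virtual_depth means the virtual
    object does not cover that pixel.
    """
    out = []
    for r, row in enumerate(camera_rgb):
        out_row = []
        for c, real_pixel in enumerate(row):
            vd = virtual_depth[r][c]
            if vd is not None and vd < real_depth[r][c]:
                out_row.append(virtual_rgb[r][c])  # button is in front: draw it
            else:
                out_row.append(real_pixel)         # real object (e.g. a book) is in front
        out.append(out_row)
    return out


# One row, three pixels: a wall 2 m away, a book 0.5 m away, and an uncovered pixel.
print(composite([["red", "red", "red"]], [[1.0, 1.0, None]],
                [["wall", "book", "wall"]], [[2.0, 0.5, 2.0]]))  # [['red', 'book', 'wall']]
```

Better depth estimates, for example from object-aware machine vision, improve the `real_depth` input rather than changing this comparison.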

AI plays a role here through “Machine Vision.” Advanced AI models can identify objects (e.g., “This is a coffee mug”) and predict their boundaries more accurately than raw depth sensors alone, leading to “cleaner” occlusion and more believable interactions.[2, 5]

Part III: The Social and Collaborative Future of Tapping

When tapping is enhanced by AI and spatial computing, it ceases to be a solo activity. Shared spatial maps and “Multi-user Synchronization” allow multiple people to interact with the same virtual red button in the same room.[6]

Collaborative Data Annotation and Shared Experiences

Frameworks like CASimIR facilitate online real-time multi-player games where participants “tap” in sync with a rhythm.[33] In a spatial environment, this could manifest as a “Digital Gymkhana” where a group of friends must find and tap a series of virtual buttons hidden throughout a city park.[34]

AI acts as the “referee” and “narrator” for these social games.

- Real-time Translation: LLMs can translate the “Smart NPC’s” dialogue for different players in real-time, allowing a global audience to participate in the same spatial experience.[4, 35, 36]

- Group Behavior Analysis: The AI monitors the collective tapping frequency of the group. If the group is moving too slowly, the AI NPC might say, “Come on team, you’re falling behind the leaderboard!”.[4, 37]

Gamification of the Mundane: From Chores to Quests

The “Tap-to-Earn” (T2E) model, currently popular in mobile gaming, is moving into the spatial world.[11] By using AR glasses, a mundane task like “sorting the mail” or “stocking shelves” can be transformed into a tapping game.

- Visual Guidance: AR overlays a virtual button on the “correct” shelf. The worker “taps” the virtual button once the physical item is placed.[20, 24, 38]

- Personalized Progress: The AI tracks the worker’s efficiency and provides a “personalized learning path,” suggesting faster techniques based on the worker’s unique movement patterns.[4]

This “gamification of productivity” improves task optimization and reduces burnout by introducing entertaining rewards and challenges into otherwise monotonous work.[34, 37, 39]

Part IV: Technical Architecture and Integration Workflows

For professional developers, the path to creating these experiences involves a specific stack of emerging technologies.[32, 40]

The Developer Stack

- Engines: Unity and Unreal Engine remain the primary platforms for 3D rendering and spatial logic.[2, 32]

- AI Orchestration: Inworld AI or Convai provide the “Character Brain” that connects to the LLM (GPT-4/Llama 3) via low-latency APIs.[5, 8, 10]

- Spatial SDKs:

  - visionOS: For high-fidelity gaze and gesture interaction.[2]

  - MRTK3: For “volumetric buttons” and “object manipulators” that work across different hardware.[2]

  - Azure Spatial Anchors: For persistent, multi-user experiences in large-scale environments.[6, 31]

The “Staged Promotion” Memory Pattern

To ensure the AI agent improves without “destroying data workflows,” developers must treat the agent’s memory like a production pipeline.[18]

- Validation Gates: Before a new tapping “fact” (e.g., “The user loves clicking the button at 2 AM”) is added to the agent’s long-term semantic memory, it must pass a validation gate to ensure it doesn’t lead to faulty reasoning or hallucinations.[18]

- Staged Promotion: New “memories” are first tested in an isolated environment. Only if they improve “business outcomes” (e.g., player retention or task accuracy) are they promoted to the production agent.[18]
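
The two stages can be sketched as a gate followed by a comparison in an isolated copy. Everything here is hypothetical: memories are modeled as key/value facts with an evidence count, the gate's rules are examples, and `evaluate` stands in for whatever offline measure of player retention or task accuracy a team would actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Memory:
    key: str      # e.g. "preferred_play_hour"
    value: str    # e.g. "02:00"
    support: int  # number of observed sessions backing this fact


def passes_gate(m: Memory, production: dict[str, str], min_support: int = 5) -> bool:
    """Validation gate: enough evidence, and no conflict with an already-promoted fact."""
    conflicts = m.key in production and production[m.key] != m.value
    return m.support >= min_support and not conflicts


def staged_promotion(candidates: list[Memory], production: dict[str, str],
                     evaluate: Callable[[dict[str, str]], float]) -> dict[str, str]:
    """Promote a gated memory only if it improves the metric in an isolated staging copy."""
    baseline = evaluate(production)
    for m in candidates:
        if not passes_gate(m, production):
            continue
        staging = {**production, m.key: m.value}  # copy; the live memory is untouched
        score = evaluate(staging)
        if score > baseline:
            production, baseline = staging, score
    return production


live = {"favorite_color": "red"}
candidates = [Memory("preferred_play_hour", "02:00", support=8),
              Memory("favorite_color", "blue", support=9)]  # conflicts, so it is gated out
# Toy metric for illustration only: more facts count as better.
print(staged_promotion(candidates, live, evaluate=lambda mem: float(len(mem))))
```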

Part V: Ethical Frontiers and the “Human-in-the-Loop”

As we outsource our interaction logic to AI agents, the role of the human designer evolves from “creator” to “curator”.[7, 11, 41]

Authenticity vs. Automation

One of the primary concerns among the gaming community is that AI-generated content can feel “shallow” or “repetitive”.[7, 23, 41] A “Smart NPC” that only reacts to taps might eventually feel like “filler content”.[23]

- The Solution: Human writers should focus on the “character story and personality,” while the LLM handles the “real-time responses” to the player’s specific actions.[7, 35, 41]

- Hybrid Models: Combining small, specific LLMs (for speed and task accuracy) with large, general LLMs (for creative dialogue) ensures a balance between realism and computational efficiency.[1]
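
One way to realize such a hybrid is a router in front of the two models. The routing rule below (task type plus prompt length) is an illustrative assumption, not a method from the cited work, and the model clients are stand-in functions rather than real API calls.

```python
from typing import Callable

# Hypothetical set of short, structured game-logic tasks suited to a small model.
STRUCTURED_TASKS = {"classify_tap_pattern", "extract_intent", "check_lore"}


def route(task: str, prompt: str, small: Callable[[str], str],
          large: Callable[[str], str], max_small_words: int = 40) -> str:
    """Send short structured tasks to the small model and everything else to the large one."""
    if task in STRUCTURED_TASKS and len(prompt.split()) <= max_small_words:
        return small(prompt)
    return large(prompt)


def small_model(prompt: str) -> str:  # stand-in for a fast, task-specific model
    return "[small] " + prompt


def large_model(prompt: str) -> str:  # stand-in for a large, general creative model
    return "[large] " + prompt


print(route("check_lore", "Is a spaceship allowed here?", small_model, large_model))
print(route("npc_dialogue", "Greet the player after 1,000 taps.", small_model, large_model))
```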

Privacy and the “Sovereign Home”

Spatial computing devices “record everything.” When a user places a virtual button in their home, the device “sees” their family, their furniture, and their private life.[4, 21]

- Ethical Data Usage: Developers must ensure transparency in how sensory and environmental data is used. Personalized AI should not “stalk” the user but rather “support” them.[4, 5]

- Local Processing: The move toward “on-device” LLMs and spatial processing is critical to ensuring that the user’s “spatial twin” remains their own.[35]

Summary: A New Era of Interactive Experience

The “simple tapping mechanic” is simple no longer. By infusing it with generative intelligence and spatial awareness, we have created a new medium of expression.

| Feature | Traditional Tapping | AI + Spatial Tapping |
|---|---|---|
| Interaction | 2D / Screen-based | 3D / Environment-based |
| Feedback | Visual/Sound (Static) | Personalized Dialogue (Dynamic) |
| Progress | Linear/Numerical | Narrative/Evolving |
| Presence | Passive | Immersive/Persistent [2, 4, 5, 6] |

The convergence of these technologies allows us to turn any physical surface into a digital interface, any “NPC” into a lifelong companion, and any “mundane task” into a heroic quest. This is the promise of the next generation of emerging technologies: a world where our digital and physical lives are unified into a single, intelligent, and responsive reality.

References

[1] Understanding LLMs for Game NPCs: An … - Diva-portal.org

[2] Spatial Computing Explained: Examples and Key Concepts - SCAND

[3] Tap Game

[4] Generative AI and the Future of Augmented Reality Gaming …

[5] AI NPCs and the future of gaming - Inworld AI

[6] What Are Spatial Anchors and Why They Matter - Qualium Systems

[7] Discussion: next generation of games using LLMs to power NPC dialogues - Reddit

[8] Inworld AI: My Deep Dive into the Future of Interactive Characters - Skywork.ai

[9] Introducing Goals and Actions 2.0 - Inworld AI

[10] Bringing Personality to Pixels, Inworld Levels Up Game Characters Using Generative AI

[11] How Does AI Integration Enhance Tap to Earn Game Development? - Antier Solutions

[12] Push, Tap, Dwell, and Pinch: Evaluation of Four Mid-air Selection Methods Augmented with Ultrasonic Haptic Feedback - ResearchGate

[13] What is Gesture-Based Interaction? - IxDF

[14] LeapBoard: Integrating a Leap Motion Controller with a Physical Keyboard for Gesture-Enhanced Interactions - York University

[15] At a Glance to Your Fingertips: Enabling Direct Manipulation of Distant Objects Through SightWarp - arXiv

[16] How AI is Enhancing Immersive Experiences - BrandXR

[17] The AI revolution in gaming: personalization, efficiency, and the bottom line - Phrase

[18] 7 Tips to Build Self-Improving AI Agents with Feedback Loops - Datagrid

[19] The case for adding AI agents to open world games - Inworld AI

[20] Gest-SAR: A Gesture-Controlled Spatial AR System for Interactive Manual Assembly Guidance with Real-Time Operational Feedback - MDPI

[21] A Case Study About Cybersecurity Risks in Mid-Air Interactions of Mixed Reality-Based Smart Manufacturing

[22] Effects of AR on Cognitive Processes: An Experimental Study on Object Manipulation, Eye-Tracking, and Behavior Observation in Design Education - NIH

[23] The future of play: How is AI reshaping game development and player experience?

[24] Top Gamification Trends for Boosting Learning Engagement - SHIFT eLearning

[25] Computing with Smart Rings: A Systematic Literature Review - arXiv

[26] “I Can See Your Password”: A Case Study About Cybersecurity Risks in Mid-Air Interactions of Mixed Reality-Based Smart Manufacturing Applications - J. Comput. Inf. Sci. Eng., ASME Digital Collection

[27] Modernizing Learning: Building the Future Learning Ecosystem - ADL

[28] AI Agent Feedback Loops | Faster learning, safer output - The Pedowitz Group

[29] Creating a Feedback Loop: Integrating User Insights into AI Agent Development - Medium

[30] Computational & neural investigation of skill learning across speeds in video games - Amazon S3

[31] Spatial Computing and Intuitive Interaction: Bringing Mixed Reality and Robotics Together - Microsoft

[32] XR/AR/VR & spatial computing platforms/tools: Industry Primer - Umbrex

[33] Creating audio based experiments as social Web games with the CASimIR framework

[34] What is Gamification and its benefits - Imascono

[35] Using LLMs for Game Dialogue: Smarter NPC Interactions - Pyxidis

[36] The convergence of AI and immersive environments - Capgemini

[37] The Future Of Gamification In Corporate Environments: Transforming Business Strategies

[38] Immersive Learning: How AR and VR Are Transforming Training - Ignite HCM

[39] 11 Gamification Examples for Retail - Marketingblatt

[40] The Trillion Dollar AI Software Development Stack - Andreessen Horowitz

[41] Generative AI in Game Design: Enhancing Creativity or Constraining Innovation? - MDPI